Standardized exams measure intrinsic ability, not racial or socioeconomic privilege

Recently, the use of standardized testing has come under intense scrutiny in America:

- An elite magnet high school in Virginia, Thomas Jefferson High School for Science and Technology, recently eliminated a standardized test score requirement for applicants, in favor of grade-point averages and other “holistic” criteria.

- The entire University of California system has decided to forgo standardized tests such as the SAT and the ACT entirely when evaluating applicants.

- The American Bar Association is pushing for law schools to go LSAT-optional.

It is straightforward to find many more examples of this trend at all levels of the educational hierarchy.

There are typically several motivations given for these changes:

- Intellectual ability may not be a coherent or quantifiable concept in the first place

- Standardized exams may not capture the full range of students’ intellectual abilities

- Socioeconomic adversity or racial discrimination may lead to certain subpopulations having depressed standardized exam scores relative to their intrinsic ability

- Families and cultures that place high value on exam preparation may lead to certain subpopulations having overly high standardized exam scores relative to their intrinsic ability

Typically, the disadvantaged groups (exam scores underestimate ability) are thought to be poor, Black, Hispanic, or Native American students, and the advantaged groups (exam scores overestimate ability) are thought to be Asian-Americans.

These motivations are wrong.

However, it is important to be clear about why they are wrong. They are not wrong because I disagree with them on an ideological basis; rather, they are wrong because they conflict with the existing scientific literature on cognitive ability, the heritability of intelligence, and standardized testing. They are empirically wrong and run against decades’ worth of detailed studies spanning the fields of psychology, sociology, education, and modern genetics.

In this post, I outline a clear, step-by-step argument that lays out a strong case for the pro-standardized testing viewpoint. I establish the following points:

- Intelligence is measured by a single factor, g

- The majority of differences in intelligence are genetic

- Common objections to heritability estimates are invalid

- Standardized test scores are good measures of intelligence

- Test scores are unaffected by parental income or education

- Test preparation has a minimal effect on test scores

- Asian culture does not explain Asian-American exam outperformance

In sum, I supply a succinct argument that rebuts all of the typical arguments in favor of eliminating standardized exams. Upon a comprehensive review of the literature, I find that cognitive ability is a coherent, innate, and heritable trait; that standardized exams are good measures of cognitive ability, and are largely unaffected by parental income or education; finally, that exam scores are not meaningfully affected by student preparation, motivation, educational quality, or parental pressure, and certainly not to a degree necessary to explain group differences in performance.

The conclusion is that standardized exams are an accurate and effective measurement of innate cognitive ability. Their usage does not introduce or exacerbate preexisting societal biases or privileges, and their elimination is tantamount to an explicit policy of discrimination against higher-performing groups in favor of lower-performing groups. That is to say: anti-Asian discrimination.

I would like to preemptively thank Crémieux for a number of useful Twitter threads which I extensively consulted while compiling this post. Much of the hard labor is his; I am but a mere synthesizer of preexisting content. The same gratitude is, naturally, extended to all others whose work I have cited in this post.

Intelligence is measured by a single factor, g

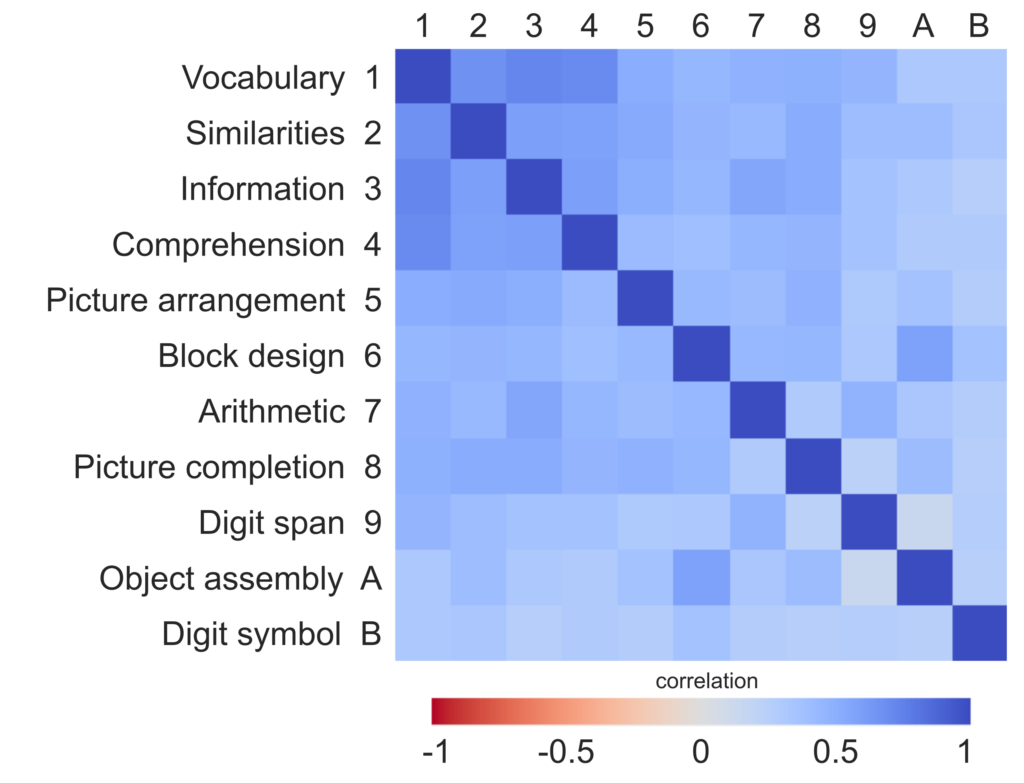

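First, it’s important to understand how cognitive ability is structured. Spearman first observed in 1904, and elaborated at length in The Abilities of Man (1927), that scores on tests of different cognitive abilities are all positively correlated with each other. For example, people who do well on vocabulary tests also tend, on average, to do well on tests of arithmetic and spatial reasoning.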

The common factor that explains the largest share of the variance shared across such tests is termed g, or the g factor, denoting “general intelligence.” The validity of this construct has been demonstrated many times over the course of the past century. For example, Johnson et al. (2008), working to replicate results from prior work in 2004, continue to find support for “the existence of a unitary higher-level general intelligence construct whose measurement is not dependent on the specific abilities assessed.” The validity of modeling cognitive ability as derived from a unitary g factor has also been confirmed in modern cohort studies such as Panizzon et al. (2014). Attempts to construct models of human cognitive ability either produce multi-factor models that fail to match the predictive power of g (such as Gardner’s “multiple intelligences,” which have long fallen out of favor) or, ultimately, recapitulate a hierarchical model in which a unitary factor, analogous to g, stands at the top. For a more detailed review of the background literature on g, the reader is advised to consult Stuart Ritchie’s Intelligence: All That Matters.
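To make the idea of a single common factor concrete, here is a minimal sketch in Python. It takes a small correlation matrix for four hypothetical tests (the numbers are invented for illustration and do not come from any study cited here), extracts the first principal component by power iteration, and reports the share of total variance it accounts for along with each test's loading. Treating the first principal component as g is a simplifying assumption; the literature typically uses factor-analytic or hierarchical models, which give similar but not identical loadings.

```python
"""Sketch: first principal component of a hypothetical test battery as a proxy for g."""


def power_iteration(matrix, iterations=500):
    """Leading eigenvalue and unit eigenvector of a symmetric matrix with positive entries."""
    n = len(matrix)
    vec = [1.0] * n
    for _ in range(iterations):
        product = [sum(matrix[i][j] * vec[j] for j in range(n)) for i in range(n)]
        norm = sum(x * x for x in product) ** 0.5
        vec = [x / norm for x in product]
    # Rayleigh quotient v^T M v of the converged unit vector gives the eigenvalue
    eigenvalue = sum(vec[i] * sum(matrix[i][j] * vec[j] for j in range(n)) for i in range(n))
    return eigenvalue, vec


def first_factor_summary(corr):
    """Share of total variance on the first component, plus each test's loading."""
    eigenvalue, vec = power_iteration(corr)
    share = eigenvalue / len(corr)  # trace of a correlation matrix = number of tests
    loadings = [eigenvalue ** 0.5 * v for v in vec]
    return share, loadings


# Hypothetical correlations among four tests (say vocabulary, arithmetic,
# matrices, spatial rotation); all positive, as in real cognitive batteries.
battery = [
    [1.0, 0.6, 0.5, 0.4],
    [0.6, 1.0, 0.5, 0.4],
    [0.5, 0.5, 1.0, 0.3],
    [0.4, 0.4, 0.3, 1.0],
]

share, loadings = first_factor_summary(battery)
print(f"first component share of variance: {share:.2f}")
print("loadings:", [round(x, 2) for x in loadings])
```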

It is worth noting that the existence of g is not obvious a priori. In athletics, for instance, there is no intuitively apparent “a factor” that explains the majority of the variation across all domains of athleticism. While many sports do end up benefiting from the same traits, in certain cases different types of athletic ability may be anticorrelated: for instance, the specific body composition and training required to be an elite runner will typically disadvantage someone in the shot put or bodybuilding. However, when it comes to cognitive ability, no analogous tradeoffs are known.

We will later discuss the relationship between g and exam scores. Insofar as the two are correlated, the relationship between intelligence and life outcomes (income, wealth, physical health, overall life satisfaction) carries over directly to exam scores. The reader should also note that there is a rich literature demonstrating the relevance of the abstract construct of g to everyday life, ranging from classic work by Gottfredson (1997) to modern replications such as those by Zisman and Ganzach (2022). Differences in g are not merely statistical artifacts of purely academic interest; they reflect real differences between individuals with substantial predictive value for numerous aspects of adult life.

Popular critiques of g, or of the science of cognitive ability in general, surface with some regularity. These criticisms are typically flawed, marked by a lack of theoretical understanding as well as deep ignorance of the empirical literature. The purpose of this report is not to supply a history of the scientific debate on g; nevertheless, because a few such critiques are cited with great frequency, they are worth mentioning in passing:

- Gould (1981) formulated a number of wide-ranging criticisms of classical research on cognitive ability. A number of central empirical claims made by Gould regarding a classic lead-shot study by Morton were demonstrated in Lewis et al. (2011) to themselves be “poorly supported or falsified.” Similarly, a reanalysis by Warne et al. (2018) concluded that Gould’s negative analysis of the Army Beta intelligence test “mischaracterized” critical aspects of the data under consideration. Finally, many of the broader aspects of Gould’s work (for example, his examination of the historical literature) were refuted by Bartholomew (2014).

- Shalizi (2007) (archive) advances a number of superficially technical criticisms. For example, he claims that the Perron–Frobenius theorem guarantees the extraction of a general factor from any test battery; however, there is no guarantee, before looking at the actual correlations, that all test scores are positively correlated with each other, nor any guarantee that the first latent factor explains as much of the shared variance as g does for cognitive batteries. Shalizi also ignores the long history of failed attempts to find assessments of cognitive ability not largely explained by g. Comprehensive responses to Shalizi were given by Dalliard (2013a) (archive) and Dalliard (2013b) (archive).

- Taleb (2019) (archive) supplies a very discursive and imprecise critique. The claims made are too broad-ranging and vague to properly address in this article but have been thoroughly re-analyzed by a number of public rebuttals (1, 2).
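The Perron–Frobenius point can be checked with a short sketch. It builds two hypothetical ten-test batteries in which every pair of tests correlates at the same value ρ (an idealization chosen because the answer is known in closed form: the leading eigenvalue is 1 + (n − 1)ρ). In both cases the leading eigenvector has all-positive entries, as the theorem guarantees for a matrix with positive entries, but the share of variance it explains depends entirely on how strong the correlations actually are, which only the data can tell us.

```python
"""Sketch: a positive correlation matrix always yields an all-positive first
factor (Perron–Frobenius), but how much variance it explains depends on the data."""


def power_iteration(matrix, iterations=500):
    """Leading eigenvalue and unit eigenvector of a symmetric matrix with positive entries."""
    n = len(matrix)
    vec = [1.0] * n
    for _ in range(iterations):
        product = [sum(matrix[i][j] * vec[j] for j in range(n)) for i in range(n)]
        norm = sum(x * x for x in product) ** 0.5
        vec = [x / norm for x in product]
    # Rayleigh quotient of the converged unit vector gives the eigenvalue
    eigenvalue = sum(vec[i] * sum(matrix[i][j] * vec[j] for j in range(n)) for i in range(n))
    return eigenvalue, vec


def equicorrelation(n, rho):
    """Hypothetical battery in which every pair of tests correlates at rho."""
    return [[1.0 if i == j else rho for j in range(n)] for i in range(n)]


for rho in (0.05, 0.5):
    eigenvalue, vec = power_iteration(equicorrelation(10, rho))
    share = eigenvalue / 10  # fraction of total standardized variance
    print(f"rho={rho}: all loadings positive={all(v > 0 for v in vec)}, share={share:.3f}")
```

With weak correlations the "general factor" exists in the Perron–Frobenius sense but explains only a small fraction of the variance; whether it dominates is an empirical question about the correlation matrix.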

Beyond their historical significance in the public discourse surrounding cognitive ability and g, responses to poorly formulated critiques of g themselves serve as excellent primers to the field of intelligence research, as they thoroughly address a number of intuitive or oft-cited misconceptions.

The majority of differences in intelligence are genetic

So far, we have said nothing about the origin of cognitive ability. The existence of g is compatible with fully hereditarian models, in which cognitive ability is fixed at birth; with fully environmental models, in which all humans begin with the same “blank slate” at birth and develop differing levels of cognitive ability due to differences in their family and other environments; and, of course, with anything in between these two extremes.

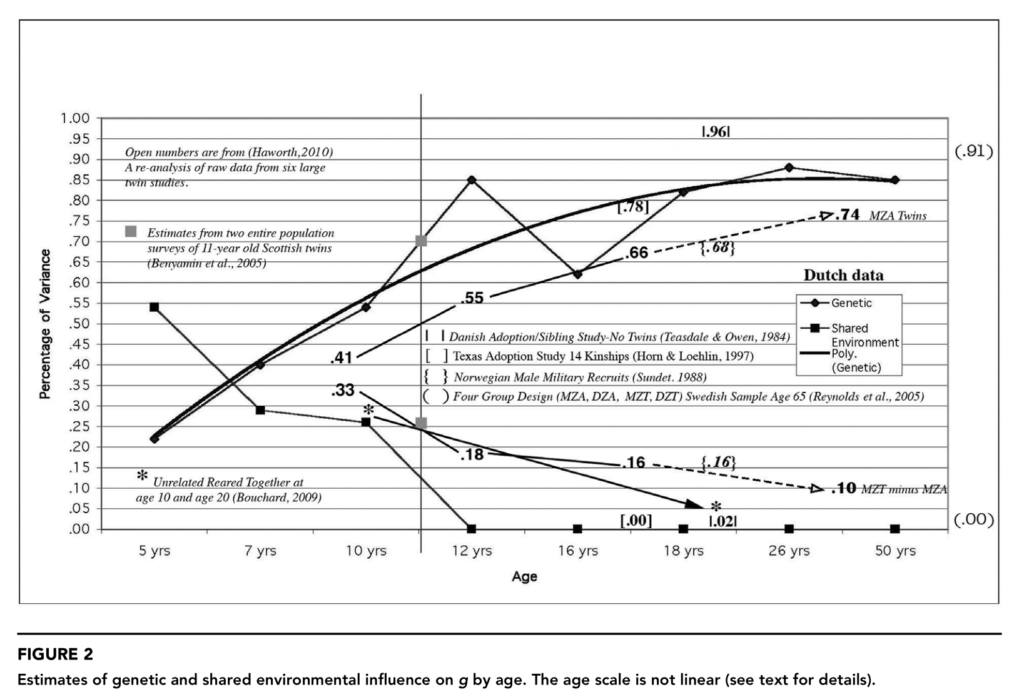

The place where traits fall on this spectrum is represented by heritability, defined as the proportion of variance in the trait in question explained by genetic variation between individuals. The traditional gold standard for measuring heritability ever since the advent of modern genetics has been the twin study, which compares identical and fraternal twins (reared together or in different families) to cleanly separate genetic from environmental influences on traits. A review conducted by Bouchard (2013) summarized a number of twin studies to show that the heritability of g increases with age, reaching a terminal value of 0.8 or above in adulthood.

This is quite a significant finding. First, it suggests that in adulthood, roughly 80% of the variation in cognitive ability between individuals is attributable to genetic differences between them. (Importantly, this value is actually an underestimate of the true heritability, due to noise in the measurement of g as well as the presence of assortative mating, which biases classical twin-study estimates of heritability downward.) Second, it shows that while cognitive abilities are more environmentally dependent in childhood, environmental influences tend to “wash out” as people age, and individuals regress toward their “genetic ability,” roughly speaking.
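For readers unfamiliar with how twin designs turn correlations into variance components, the classical back-of-the-envelope version is Falconer's formula, sketched below. It is shown only as an illustration: the studies cited above fit more elaborate structural-equation models, and the twin correlations used here are hypothetical round numbers, not values from Bouchard (2013). Note that the resulting a² is a population-level share of variance, not a per-person percentage.

```python
"""Sketch: classical ACE decomposition from twin correlations (Falconer's formulas).

Assumes equal environments for MZ and DZ twins, purely additive genetic effects,
and no assortative mating; real twin studies relax some of these assumptions.
"""
from dataclasses import dataclass


@dataclass
class ACE:
    a2: float  # additive genetic share of variance (narrow-sense heritability)
    c2: float  # shared-environment share
    e2: float  # non-shared environment share, including measurement error


def falconer(r_mz: float, r_dz: float) -> ACE:
    """Decompose trait variance from MZ and DZ twin-pair correlations."""
    a2 = 2 * (r_mz - r_dz)  # MZ pairs share twice the segregating genes of DZ pairs
    c2 = 2 * r_dz - r_mz    # twin similarity left over after genetic sharing
    e2 = 1 - r_mz           # whatever makes even identical twins differ
    return ACE(a2, c2, e2)


# Hypothetical adult twin correlations for a cognitive composite
estimate = falconer(r_mz=0.85, r_dz=0.45)
print(estimate)
```

Because assortative mating raises the DZ correlation, it lowers a² and raises c² in this formula, which is the mechanism behind the downward bias of classical twin estimates.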

Other analytic methods arrive at similar results:

- For example, a more sophisticated twin study conducted by Vinkhuyzen et al. (2012), which uses detailed family background to adjust for assortative mating, finds a total genetic heritability of 82% for intelligence.

- For those who object to twin studies in general, Schwabe, Janss, and van den Berg (2017) use nearly one million data points from the same Dutch birth cohort with detailed pedigree information to estimate a heritability of 85% for educational achievement.

While some twin studies yield lower heritability estimates, their results often reflect measurement error or other systematic flaws. For example, Willoughby et al. (2021) report a genetic heritability of 40% for ICAR-16 cognitive scores; however, they also estimate the effect of parental environment at 1% (much lower than almost all other empirical findings, as well as highly implausible), suggesting that a great deal of “unmeasured heritability” is being inaccurately bundled into the 50% contribution of non-shared environment. (One might even note that studies which are obvious underestimates, such as Willoughby et al. (2021), are actually useful as conservative lower bounds on the true values!) A magisterial overview of the history and broader landscape of twin studies of intelligence and its heritability is given in Lee (2010), itself a critical review of Nisbett (2009), which cherry-picks twin studies in order to inaccurately conclude that the true heritability of g is low.

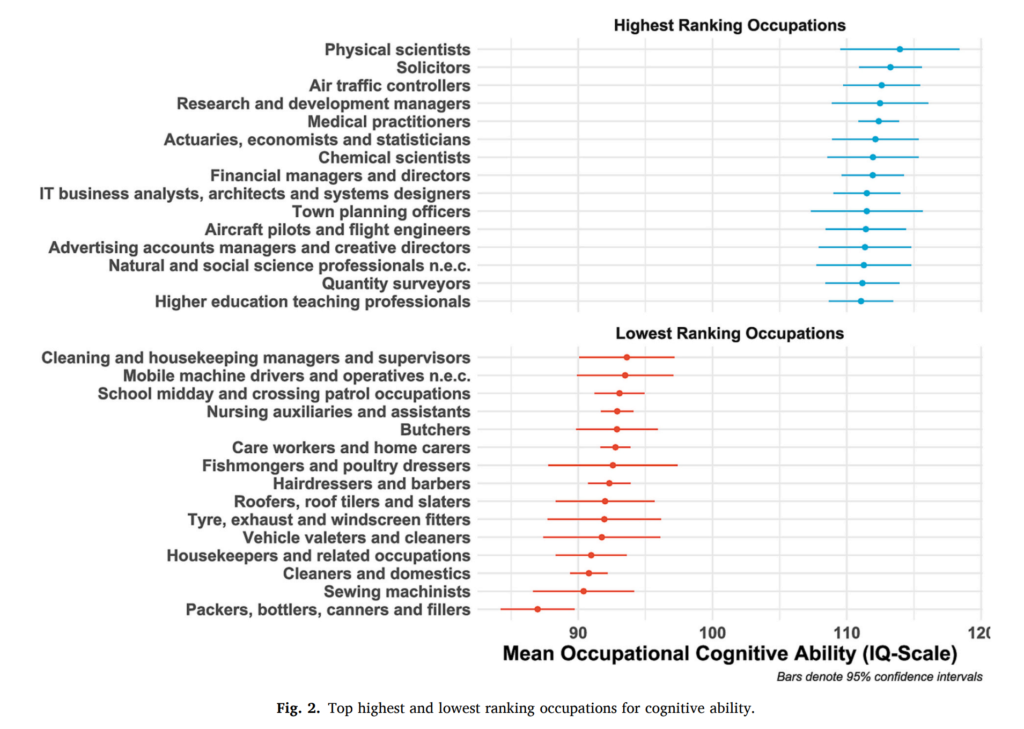

Overall, multiple decades’ worth of studies, including both twin studies and cross-sectional analyses, convincingly demonstrate that roughly 80% or more of individual variation in cognitive ability (g) is accounted for by genetic variation, at least in modern, developed countries. The reader should note that this is a truly remarkable result: it means that an individual’s genome, which is fixed at birth, could in principle be used to predict, with relatively high accuracy, a factor (g) which has been found to affect life outcomes as far-ranging as income or even health and longevity! For instance, Wolfram (2023) demonstrates that occupations are highly stratified with respect to intelligence.

In conjunction with the high heritability of g estimated by multiple studies, we may conclude that much of the information about which professions will suit a given child is, in principle, already present at the moment of conception. (There will still be a great deal of variability in specific preferences and abilities; however, the narrowing of the space of possibilities is nevertheless very dramatic.) Of course, this report is not intended to be a review of the total predictive abilities of g, so we will not spend too much time dwelling on the literature relating measurements of g to life outcomes; we merely bring up the existence of such work so that the reader may gain some appreciation for the practical significance of the heritability of human intelligence and, if they so desire, explore such tangents at their leisure.

Common objections to heritability estimates are invalid

(The first-time reader is advised to skip this section if they are relatively unfamiliar with the topic. It is placed here for narrative consistency, but is slightly more nitpicky in nature.)

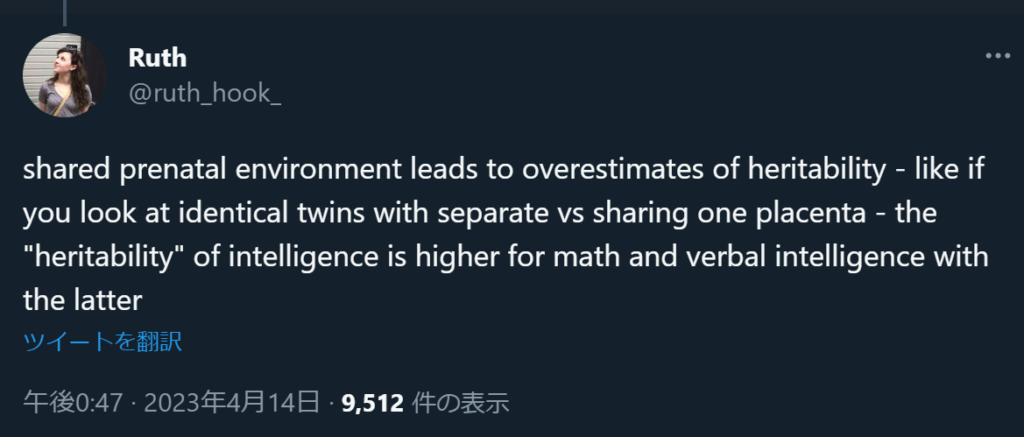

There are three common objections to the above heritability estimates. The first is that shared prenatal environment inflates twin-study estimates of heritability.

This objection simply does not apply to estimates of heritability that are not derived from twin studies, such as the estimate from Schwabe, Janss, and van den Berg (2017). Moreover, shared prenatal environment is not known to have any strong effect on cognitive ability. For example, a comprehensive review by van Beijsterveldt et al. (2016) finds that “the influence on the MZ twin correlation of the intrauterine prenatal environment, as measured by sharing a chorion type, is small and limited to a few phenotypes [implying] that the assumption of equal prenatal environment of mono- and DC MZ twins, which characterizes the classical twin design, is largely tenable.” Indeed, if womb effects increased similarity between mother and child, we would expect this effect to show up in studies of adoptees; however, per Loehlin et al. (2021), adoptees’ measured IQs (in the Texas and Colorado Adoption Projects) were correlated to a similar degree with both maternal and paternal IQ at ages 6 and 16. Overall, the claim that shared prenatal environment leads to overestimated heritability is a testable empirical claim, and the results of such tests show conclusively that it is false.

Second, there is the question of “missing heritability.” For example, GCTA estimates of the heritability of intelligence seem to find much lower heritability values compared to those reported above. Similarly, polygenic scores (PGS) constructed to predict either intelligence or educational attainment only manage to explain a very small fraction of the variance in such traits.

The explanation is that both of these estimates (GCTA and PGS) are best understood as weak lower bounds on heritability. (While PGS estimates can be cleanly interpreted as lower bounds, GCTA estimates are subject to a variety of biases themselves, and are not necessarily straightforwardly understandable as strict lower bounds.) The former estimates only the variability attributable to common variants, while the latter relies on the explicit identification of genetic variants that predict cognitive ability.

We can reason about the applicability of GCTA estimates through analogy to other human traits. For example, Yang et al. (2011) first provided a GCTA estimate of the heritability of human height at ~0.5. A decade later, Yengo et al. (2022) supplied, again, an estimate of ~0.5 heritability based on common variants. At the same time, however, whole genome estimates of the heritability of human height are able to recover the same values (~0.7) given by pedigree-based heritability, such as in Wainschtein et al. (2019). In the specific case of height, estimates of heritability based on common variants, while stable over the course of years, were clearly underestimates of the actual heritability, which includes contributions from a large number of rare variants. Similarly, with cognitive ability, the “heritability gap” is likely to be due to the contribution of rare variants not assessed by the genotyping arrays used for GCTA-based estimates. (Some of the difference may also result from the usage of primarily European data for imputation, which does not generalize perfectly to non-European populations.)

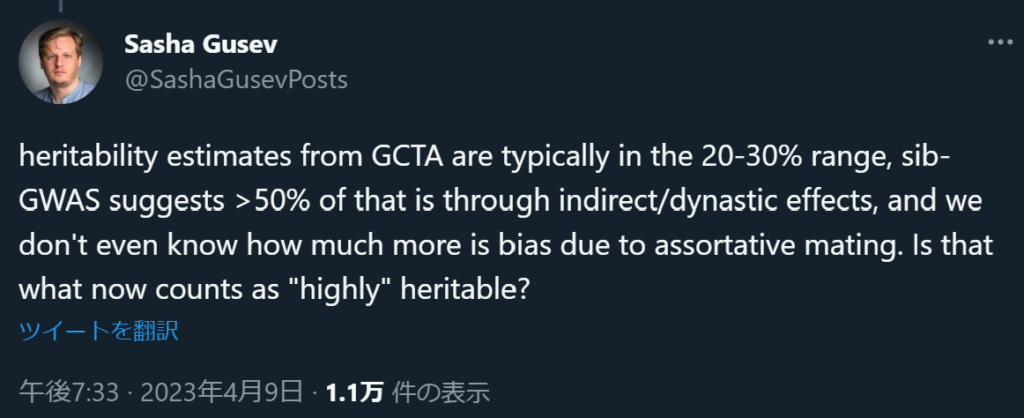

Gusev further claims that studies such as Howe et al. (2022) or Young et al. (2022) demonstrate that estimates of the heritability of g (either via traditional GCTA or via the construction of polygenic scores) are in fact upper bounds on the heritability of g. This argument is prima facie absurd: even accepting these studies’ findings that traditional heritability estimates for g are overestimated due to assortative mating and shared environment, the presence of an upward bias does not turn an estimate into an upper bound! The first of the two papers, for example, estimates a much lower heritability for cognitive ability (n = 27,638) than for height (n = 149,174), and also finds that the heritability of cognitive ability is “deflated” by a greater degree than height after indirect genetic effects are taken into account. However, especially in light of the drastic difference in sample size, as well as the inherently higher measurement error in simple tests of cognition compared to measurements of height, this simply does not logically imply that the heritability estimates for g are upper bounds. It merely implies, exactly as it claims to find, that the estimates are potentially biased upward due to biometric interactions; to conflate that with the unadjusted estimates forming an upper bound is, pure and simple, an error in logical reasoning. The identification of such interactions is also fully consistent with the results of twin studies; it would be surprising if they were not found within cross-sectional genomic data, and their existence does not change the fact that as cohort data improves, estimates of heritability continue to march upward.
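The distinction between an upward-biased estimate and an upper bound is easy to see in a toy simulation (all numbers hypothetical). Each simulated study reports the true value plus a small systematic upward bias plus ordinary sampling and measurement noise. The average estimate sits above the truth, as it should for an upward-biased estimator, yet with a bias of 0.03 and noise of standard deviation 0.1, roughly 38% of individual estimates (the normal probability Φ(−0.3)) still fall below the true value, so no single estimate can be read as a ceiling.

```python
"""Sketch: an upward-biased estimator is not an upper bound.

Toy model (all numbers hypothetical): each study reports the true value plus a
small systematic upward bias plus sampling/measurement noise.
"""
import random


def simulate_estimates(true_value=0.5, bias=0.03, noise_sd=0.1, n_studies=10_000, seed=0):
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    return [true_value + bias + rng.gauss(0, noise_sd) for _ in range(n_studies)]


estimates = simulate_estimates()
mean_estimate = sum(estimates) / len(estimates)
share_below_truth = sum(e < 0.5 for e in estimates) / len(estimates)
print(f"mean estimate: {mean_estimate:.3f} (true value 0.5)")
print(f"share of estimates below the true value: {share_below_truth:.2f}")
```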

As for PGS estimates of heritability, the genetic architecture of intelligence, and of other complex human traits, is typically such that thousands, if not tens of thousands, of genetic variants affect the final trait value, as described in Hsu (2014). As data quality improves, PGS-derived heritability is also expected to increase, much as it has for height and a number of other complex phenotypic traits throughout the 2010s; moreover, PGSs for both cognitive ability and educational attainment continue to improve with the collection of greater quantities of biobank data, with little sign of a “plateau” indicating that we have reached anything close to the best possible PGS. Even setting aside the lack of sufficient data to train a high-quality polygenic model for g, it is nonsensical to claim that a polygenic score can ever be anything but a lower bound on the true heritability of the trait it predicts: it follows from the definition of a polygenic score that if a PGS explains a fraction d of the variance in cognitive ability, the true heritability must be at least d. Overall, vague allusions to GCTA/PGS estimates of heritability, and especially to the deflation of heritability estimates as a rationale for interpreting them as upper (rather than just lower) bounds, are logically incoherent at best and disingenuous at worst.
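The lower-bound logic can be illustrated with a toy simulation, a sketch under stated assumptions rather than a model of any real trait. Every parameter below is hypothetical: 200 causal variants with random additive effects, environmental noise scaled so that heritability is about 0.8, and a "polygenic score" that uses the true effect sizes of only the first 50 variants (the most favorable case a real PGS could hope for). The partial score's R² with the phenotype comes out well below the heritability, and only the complete genetic value approaches that ceiling.

```python
"""Sketch: a polygenic score built from a subset of causal variants cannot, in
expectation, explain more phenotypic variance than the heritability (toy simulation).

All parameters are hypothetical; the score uses the *true* effect sizes, which
is the most favorable case possible for a PGS.
"""
import random


def pearson_r(xs, ys):
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / (sxx * syy) ** 0.5


def simulate(n_people=2000, n_variants=200, n_in_score=50, h2=0.8, seed=1):
    rng = random.Random(seed)
    effects = [rng.gauss(0, 1) for _ in range(n_variants)]
    # Allele counts 0/1/2 at frequency 0.5 for every person and variant
    genotypes = [[rng.randint(0, 1) + rng.randint(0, 1) for _ in range(n_variants)]
                 for _ in range(n_people)]
    genetic = [sum(b * g for b, g in zip(effects, row)) for row in genotypes]
    # Scale environmental noise so that genetic variance / total variance is about h2
    mean_g = sum(genetic) / n_people
    var_g = sum((g - mean_g) ** 2 for g in genetic) / n_people
    env_sd = (var_g * (1 - h2) / h2) ** 0.5
    phenotype = [g + rng.gauss(0, env_sd) for g in genetic]
    # "PGS" = weighted sum over only the first n_in_score causal variants
    pgs = [sum(b * g for b, g in zip(effects[:n_in_score], row[:n_in_score]))
           for row in genotypes]
    return pearson_r(pgs, phenotype) ** 2, pearson_r(genetic, phenotype) ** 2


partial_r2, full_r2 = simulate()
print(f"R^2 of partial score: {partial_r2:.2f}")
print(f"R^2 of full genetic value: {full_r2:.2f} (target h2 = 0.8)")
```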

The final objection is that heritability is depressed by adverse environmental circumstances. For example, if all members of a population are severely malnourished and mistreated, it is likely that they will all have extremely low cognitive abilities in adulthood regardless of genetic makeup. The contention is therefore that if we were to examine less privileged groups, which face adverse circumstances in childhood, we would find lower heritability values. Therefore, the values of >80% arrived at by twin studies in predominantly white subpopulations of developed nations may effectively overstate the heritability of intelligence in other groups.

More generally, this is known as the Scarr–Rowe hypothesis: if true, groups that face some sort of adversity in early life (poverty, discrimination, and so on) should show lower heritability for cognitive ability. Conversely, if we observe that heritability differs across groups, that ought to constitute evidence that the group with lower heritability faced greater overall adversity in early childhood. The extent to which measured heritability actually differs across different subsections of the population is, of course, an empirically answerable question.
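In the simplest additive reading of this hypothesis, heritability is just a ratio of variances, h² = V_G / (V_G + V_E), so anything that inflates environmental variance lowers measured heritability even if genetic variance is unchanged. The sketch below uses hypothetical variance components to show this; it ignores gene-environment interaction, which the Scarr–Rowe literature often models explicitly.

```python
"""Sketch: heritability as a variance ratio, h2 = V_G / (V_G + V_E).

Holding genetic variance fixed and increasing environmental variance (for example,
through uneven deprivation) lowers measured heritability. Values are hypothetical.
"""


def heritability(var_genetic: float, var_environment: float) -> float:
    return var_genetic / (var_genetic + var_environment)


V_G = 0.8  # same genetic variance assumed in both hypothetical groups
for label, var_env in [("favorable environment", 0.2), ("deprived environment", 1.5)]:
    print(f"{label}: h2 = {heritability(V_G, var_env):.2f}")
```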

While this hypothesis is likely true to some nonzero extent in principle, we should note that even among a sub-Saharan population mired in extreme poverty, Hur and Bates (2019) were able to estimate a heritability of ~35% for intelligence. It is difficult to imagine that any reasonable subpopulation of a first-world country faces circumstances as adverse as those of poor sub-Saharan teenagers; correspondingly, 35% ought to be taken as a fairly hard lower bound for how far environmental deprivation can reduce the heritability of intelligence. (However, one may wish to take these results with a grain of salt, given that no replication studies in comparable populations presently exist.) In de Rooij et al. (2010), the authors found that even a 5-month-long famine at the end of World War II was barely associated with reduced cognitive performance in later life, and only in one of four tests of cognitive ability they tried (nonsignificant results for the others). Finally, Fuerst (2014) (later systematically confirmed by Pesta et al. (2020) and Pesta et al. (2023)) found that heritability of cognitive ability is essentially the same across different ethnic subgroups in the United States. Since socioeconomic status and (presumed) exposure to racial discrimination are highly variable across different American ethnicities, this result suggests that neither of those two factors significantly affects the heritability of cognitive ability, at least within the milieu of modern Western society.

One particular study, Turkheimer et al. (2003), is often cited in support of a lower heritability of g among low-income strata of American society. However, this is a problematic piece of evidence. First, heritability estimates were only provided for 7-year-olds; because environmental effects asymptotically “wash away” with age, this is highly unrepresentative of heritability in late adolescence and early adulthood, which is what we are most interested in. Second, larger follow-up studies on older cohorts, such as Rask-Andersen et al. (2021), often fail to reproduce a clear association between lower socioeconomic status and lower heritability of g. The most likely explanation is that the result of Turkheimer et al. (2003) is either purely artefactual, a reflection of transiently depressed heritability in early childhood, or a combination of the two. (The reader may also be interested to know that a re-analysis of the same cohort data used by Turkheimer et al., namely by Beaver et al. (2013), found no heritability differences by race, suggesting that if one accepted wholesale the results of Turkheimer et al., one ought to also consequently accept the conclusion that even among economically disadvantaged families, where one might expect to find the most severe levels of racial discrimination and prejudice, racial ancestry does not appear to have any discernible differential impact upon the cognitive abilities of different ethnic subgroups.)

Overall, we find that objections to a high heritability estimate of g are, generally, lacking in substance. In some cases, they are simply false claims; in other cases, they seem to reflect a fundamental misunderstanding of the methods in question and the results cited. The one trait these criticisms do share, however, is that they all have the flavor of “isolated demands for rigor.” Seldom are claims about the heritability of other complex human traits, such as height, disease risk, or psychological attributes, questioned to such depth; at any given point in time, given sufficient effort and motivation, it would likely have been possible to identify both theoretical and empirical objections to the heritability of height. Yet it is now well accepted that height is well over 80% heritable in a modern Western country with adequate nutrition, an estimate that has steadily ratcheted upward over the course of the 21st century.

One final note: critics of heritability estimates of g often claim that “hereditarians” have erred in their predictions (of, say, the eventual potential to use polygenic scores to estimate g to a precision equal to its broad-sense heritability). Such claims are then used to support the notion that cognitive ability is too complex or too confounded a trait, and that further research in “hereditarian” directions is therefore fruitless. This argument is self-contradictory: given that we have an order of magnitude less data for cognitive ability than for other complex traits like height or cardiovascular disease risk, and that existing measurements of g in cohort data are often noisy and range-restricted, our failure so far to generate high-quality genetic predictors of g (or of educational attainment, and so on) is evidence that we should collect more, and better, datasets, not evidence that we should stop collecting and analyzing such data, or that doing so is in any sense fruitless or doomed to failure.

Standardized test scores are good measures of intelligence

We now understand that intelligence is summarized by a single factor, g, which is highly heritable (most of its variation is causally explained at the genetic level). However, we have yet to bring standardized testing into the discussion. Are SAT scores actually associated with g?

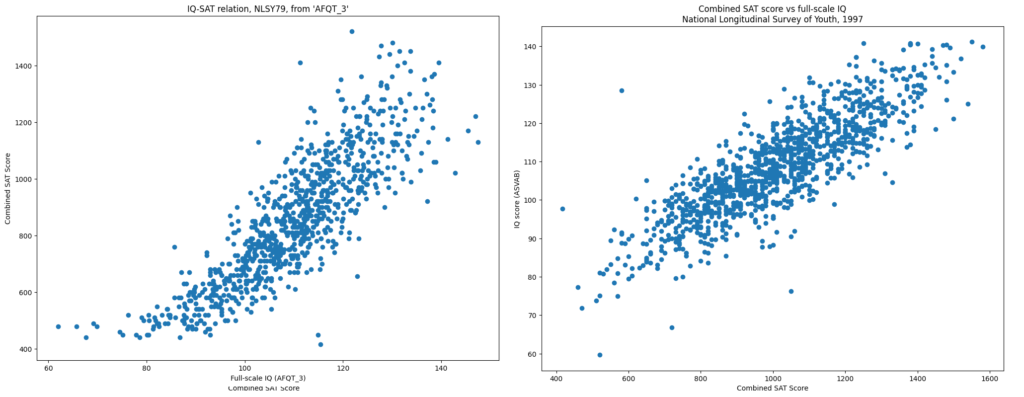

The answer is unequivocally yes. Frey and Detterman (2004) found that measures of g derived from the Armed Services Vocational Aptitude Battery (ASVAB) were correlated with SAT score at r = 0.86 among samples in the National Longitudinal Survey of Youth (NLSY) 1979. A further reanalysis by Crémieux not only reproduced the same result using the NLSY ’79 dataset but in fact revealed an even stronger and cleaner relationship in NLSY ’97. (The changes are likely attributable to less range restriction on the ’97 ASVAB and the increasing commonality of taking the SAT, meaning that the population of SAT test-takers is less selected for high g.)

A brief aside: because cognitive ability is predominantly measurable through a single factor, g, it is a priori reasonable to use a small number of standardized exam scores (such as SAT Critical Reading and SAT Mathematics subscores) to quantify a given student’s cognitive abilities. Were this not the case, it might be reasonable to suggest that we need a more holistic approach, in which we measure a large cornucopia of different abilities. However, because all cognitive tests seem to be essentially downstream of individual variation in g, it suffices to have one or two metrics of intellectual ability. Additionally, because practical constraints on examination length mean that there is a tradeoff between the “quality” of each subtest (precision, length, range) and the number of subtests given, the existence of g means that the information-maximizing choice is a fairly small number of in-depth subtests.
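The diminishing returns from adding more g-loaded subtests can be made explicit under a simple one-factor model, offered here as an assumption-laden sketch rather than a description of how the SAT is actually built. If each of k subtests has unit variance and is scored as λ·g plus independent noise, then a unit-weighted sum of the k subtests correlates with g at √k·λ / √(1 + (k − 1)λ²), which is the Spearman–Brown logic applied to a g-loaded composite. The loading λ = 0.8 used below is hypothetical.

```python
"""Sketch: how well a unit-weighted composite of k subtests tracks g.

Assumes a one-factor model in which every subtest has the same g loading
(lam) and independent specific variance; these are simplifying assumptions.
"""
import math


def composite_g_correlation(k: int, lam: float) -> float:
    """corr(sum of k subtests, g) = sqrt(k)*lam / sqrt(1 + (k - 1)*lam^2)."""
    return math.sqrt(k) * lam / math.sqrt(1 + (k - 1) * lam ** 2)


for k in (1, 2, 4, 8, 16):
    print(k, round(composite_g_correlation(k, lam=0.8), 3))
```

With λ = 0.8, going from one subtest to two raises the correlation with g from 0.80 to about 0.88, while doubling from eight subtests to sixteen adds less than 0.02.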

The correspondence between academic achievement and cognitive ability has also been explicitly tested numerous times. Notably, Kaufman et al. (2012) study the latent factors extractable from batteries of academic and cognitive tests, finding that so-called “academic g” and “cognitive g” correlate at r = 0.83. (In actuality, there is only one g, cognitive g, so the terminology here is a slight abuse of notation.) A number of other cohorts have also been studied, although it is important to note that when the test subjects are too young, the heritability of their cognitive abilities is still relatively low (environmental effects have yet to “wash away” and genetic potential has yet to “manifest”), likely introducing a great deal of noise into the calculated correlations. That is precisely one advantage of the analysis performed by Kaufman et al., who explicitly calculate how the academic/cognitive g correlation changes with age (and find that it increases, in fact, to a value >0.9).

Test scores are unaffected by parental income or education

The reader might object that even though g is correlated with SAT scores, the possibility remains that both are simply downstream of socioeconomic privilege (as reflected by parental income, wealth, or educational status). It is well understood that, cross-sectionally, higher socioeconomic status (SES) is positively correlated with higher scores on both standardized exams like the SAT and explicit IQ tests. Of course, looking only at cross-sectional data, it is not obvious which way the causation runs, i.e., whether children do better because their parents are rich, which leads to a favorable educational environment with tutors and leisure time, or because their parents are smart first and rich second, which leads to both higher incomes and genetic transmission of intelligence.

Given the tight correlation of r = 0.85 or higher between g and SAT scores, as well as the known heritability of cognitive ability at >80%, it seems relatively implausible for differences in SAT scores to be largely causally downstream of differences in parental SES. However, to remove all doubt, we may consult the results of a number of adoption studies, all of which show that an adopted child’s score on a test of intelligence is related to the educational attainment or cognitive ability of their biological parents but not to that of their adoptive parents:

- Skodak and Skeels (1949). Adoptee IQ is correlated >0.4 with biological parents’ education levels but <0.1 with adoptive parents’ education levels. (further discussion)

- McGue et al. (2007). Intelligence, delinquency, and drug use are not significantly associated with adoptive parents’ socioeconomic status. However, all three outcomes are significantly correlated with parents’ SES for biological children. (further discussion)

- Loehlin et al. (2021). Among both the Texas and Colorado Adoption Projects, adoptee child IQ was significantly associated with their biological mother’s IQ at both ages 6 and 16, but was not associated with adoptive family SES at either age. (further discussion)

- Odenstad (2008). In a Swedish cohort, higher parental education was significantly associated with the IQ of biological children, but completely unrelated with the IQ of international adoptees. (further discussion)

These results suggest, in fact, that effectively none of the individual variation in IQ or SAT scores is causally downstream of either family environment or parental income and wealth. Otherwise, we would see some relationship between the cognitive performance of adoptees and the educational levels or incomes of their adoptive parents. The fact that we find no such relationship across multiple studies and different cohorts is therefore quite striking.

Finally, if socioeconomic status were causally upstream of test scores in a manner independent of parental intelligence, we would expect this to show up in the predictive validity of exam scores. For example, if SAT scores were purely determined by SES, controlling for SES should attenuate the correlation between SAT scores and university grades to zero. However:

- Sackett et al. (2009) show that this is not true; in fact, controlling for SES only reduces the correlation between SAT scores and first-year grades from r = 0.47 to r = 0.44, a very modest change.

- Work by Higdem et al. (2016) further demonstrated that even after subdividing by ethnic group or sex, “the test–grade relationship is only slightly diminished when controlling for SES [and] SES is a substantially less powerful predictor of academic performance than both SAT and HSGPA.” Overall, the near-complete failure of SES to provide incremental predictive power for first-year university performance beyond SAT scores is strong evidence against the idea that SES is a significant contributor to students’ performance on standardized exams, both in aggregate and within any specific racial subgroup or sex.

- An extraordinarily large (n = 143,606) study of University of California applicants by Sackett et al. (2012) again confirmed that SES adds only marginal predictive power for freshman grades beyond SAT scores, whereas SAT scores retain predictive power for freshman grades independent of SES. Even more notably, in this sample the raw correlation between parental SES and SAT score was merely r = 0.22, i.e., less than 5% of the variation in SAT scores (0.22² ≈ 0.048) was accounted for by variation in parental SES.
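The two statistics this list leans on, variance explained and a correlation “controlling for” a third variable, can be computed directly. The sketch below uses the standard first-order partial correlation formula. The SES–grade correlation of 0.20 is a hypothetical input chosen for illustration, and pairing the 0.47 figure (Sackett et al., 2009) with the 0.22 figure (Sackett et al., 2012) mixes different samples, so the output is not a reproduction of either paper’s published estimate.

```python
import math


def variance_explained(r):
    """Share of variance in one variable linearly accounted for by another (r squared)."""
    return r ** 2


def partial_correlation(r_xy, r_xz, r_yz):
    """First-order partial correlation between x and y, holding z constant."""
    return (r_xy - r_xz * r_yz) / math.sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))


r_sat_grades = 0.47   # SAT vs. first-year grades (figure quoted above)
r_sat_ses = 0.22      # SAT vs. parental SES (figure quoted above, different sample)
r_ses_grades = 0.20   # SES vs. grades: HYPOTHETICAL value for illustration

print(f"SAT variance shared with SES: {variance_explained(r_sat_ses):.1%}")
print(f"SAT-grade r controlling for SES: "
      f"{partial_correlation(r_sat_grades, r_sat_ses, r_ses_grades):.3f}")
```

With these inputs the SAT–grade correlation drops only from 0.47 to about 0.45, which illustrates why a modest SES correlation leaves most of the predictive relationship intact.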

Yet again, the bulk of the evidence is consistent with at most a minimal contribution of parental wealth to performance on standardized exams (independent of cognitive ability shared between parent and child).

Interestingly, despite the wealth of evidence to the contrary, a number of popular articles continue to lambast standardized exams for perpetuating inequities in social class and economic wealth, e.g., CNBC: Rich students get better SAT scores—here’s why. It would be tragic if it were true that test scores were really purely reflections of preexisting privilege. We should be thankful that well-controlled adoption studies suggest otherwise.

Test preparation has a minimal effect on test scores

Adoption studies already make a convincing case that parental wealth and family environment cannot exert meaningful effects on the results of IQ tests. However, one could argue that standardized exams like the SAT or ACT in particular are more susceptible to exam prep. If so, because exam prep costs both time and money, it would exacerbate preexisting socioeconomic differences in a manner unreflective of innate ability.

It’s worth noting that given the constellation of evidence above (IQ scores uncorrelated with the adoptive parental environment, g heritable at over 80%, SAT scores correlated >0.8 with g, and so on), this new hypothesis seems especially unlikely. Both IQ scores and SAT scores are correlated with g at well over 0.8, so if parental environment does not meaningfully affect IQ scores, we should also expect that it does not meaningfully affect SAT scores. But let’s take a closer look.

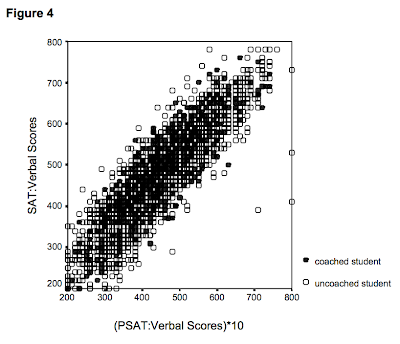

Briggs (2001) summarizes preexisting research into the effect of SAT-specific test preparation. Quoting Laird (1983), Becker (1990), and Powers and Rock (1999), he finds that the average coaching effect is typically estimated at around 10-20 points on either math or verbal sections. He also analyzes data from the National Education Longitudinal Survey of 1988 himself, finding that coaching results in a 15-point boost on the math section and a 7-point boost on the verbal section, which is barely visually discernible on a scatterplot:

The per-section standard deviation is typically around 110 points depending on subsection and administration year, so this amounts to a boost of under 0.1 standard deviations in total.
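The standard-deviation conversion above is a single division per section. This sketch assumes the approximate 110-point per-section SD used in the text; the true SD varies by section and administration year.

```python
def gain_in_sd(point_gain, section_sd=110.0):
    """Express a raw score gain as a fraction of the section's standard deviation."""
    return point_gain / section_sd


# Briggs (2001) NELS:88 coaching estimates quoted above
gains = {"math": 15, "verbal": 7}
for section, points in gains.items():
    print(f"{section}: {points} points = {gain_in_sd(points):.2f} SD")

average = sum(gain_in_sd(p) for p in gains.values()) / len(gains)
print(f"average across sections: {average:.2f} SD")
```

This prints 0.14 SD for math, 0.06 SD for verbal, and 0.10 SD on average.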

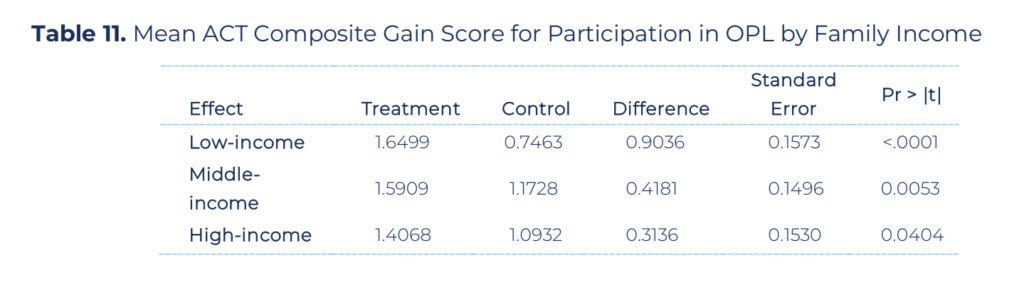

A modern, targeted intervention fares little better. Per a report by Sanchez and Harnisher (2018)—note that this research was jointly sponsored by the ACT and by Kaplan Test Prep, which are far from unbiased entities here—finds composite score gains of around 1.5 points across all income groups as a result of the Kaplan Online Prep Live program:

The standard deviation of the ACT is normalized to be 5 points, so a difference of 1.5 points corresponds to 0.3 standard deviations. This likely overestimates the true effect, because while a matched-control method was used, it is nevertheless the case that students who bother to enroll in the Kaplan prep program are slightly more intelligent and conscientious than students who do not.

Further academic work has continued to find relatively modest effects of test prep:

- Buchmann (2010) finds an effect of +0.15 standard deviations from commercial classes and +0.19 standard deviations from private tutors. Commentary from Jeff Kaufmann: “The authors seem to really want to find a larger effect so that they can claim that people who can’t afford coaching are at a larger disadvantage, so I would expect these numbers to be biased upward.”

- Moore, Sanchez, and San Pedro (2018) find, in a study commissioned by the ACT, that the effect of preparation is about +0.13 standard deviations (depending on the specific method used).

- Powers and Rock (1998) conduct a large-scale study of a number of different commercial SAT coaching programs and again estimate very modest effect sizes (around 10 points), with standard errors nearly as large.
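One way to read the standardized effects quoted in this section is as a percentile shift. The sketch assumes normally distributed scores and that everyone else’s scores stay put, so a student starting at the median moves to the percentile given by the standard normal CDF of the effect size. The effect sizes are the ones quoted above (the 0.3 SD figure is the Kaplan/ACT estimate).

```python
from statistics import NormalDist


def median_student_percentile(effect_sd):
    """Percentile reached by a median student after a gain of effect_sd,
    assuming normal scores and an unchanged comparison group."""
    return 100 * NormalDist().cdf(effect_sd)


effects = [
    ("Buchmann (2010), commercial classes", 0.15),
    ("Buchmann (2010), private tutors", 0.19),
    ("Moore, Sanchez, and San Pedro (2018)", 0.13),
    ("Kaplan/ACT report", 0.30),
]
for label, d in effects:
    print(f"{label}: +{d:.2f} SD -> percentile {median_student_percentile(d):.0f}")
```

Under these assumptions, the quoted effects move a median student to roughly the 55th to 62nd percentile.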

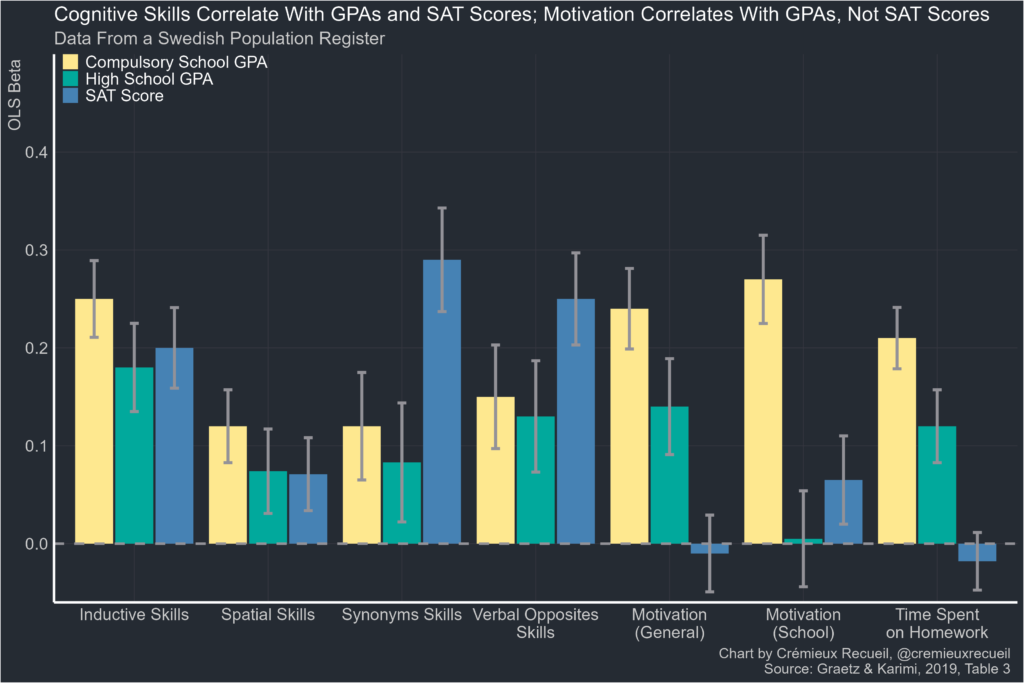

Students also effectively receive “preparation” for their standardized exams through the course of their normal schooling. If students are more motivated in school, they could conceivably end up more “prepped” for standardized exams in ways that are not reflective of intrinsic talent. However, Graetz and Karimi (2019) studied a cohort of Swedish students who took the Swedish SAT (note that this is different from the SAT administered to American students by the College Board), both general motivation and time spent on homework were only associated with higher grades, not with higher SAT scores, whereas intrinsic cognitive abilities were associated with both:

(Credit to the graph goes to Crémieux.)

In fact, spending more time on homework seems to be weakly associated with lower SAT scores. While initially counterintuitive, the result is in fact quite elegant; students with higher intrinsic abilities need to spend less time on homework, so higher homework time is an indirect signal of lower intelligence.

In general, we know that the majority of variance in standardized test scores is explained by variation in intelligence, or g. However, a barrage of studies shows that formal education has little or no effect on general cognitive ability (g) itself, as opposed to specific skills:

- Finn et al. (2013). “Random offers of enrollment to oversubscribed charter schools resulted in positive impacts of such school attendance on math achievement but had no impact on cognitive skills. These findings suggest that schools that improve standardized achievement-test scores do so primarily through channels other than improving cognitive skills.”

- Ritchie et al. (2013). “Controlling for childhood IQ score, we found that education was positively associated with IQ at ages 79 (Sample 1) and 70 (Sample 2), and more strongly for participants with lower initial IQ scores. Education, however, showed no significant association with processing speed, measured at ages 83 and 70. Increased education may enhance important later life cognitive capacities, but does not appear to improve more fundamental aspects of cognitive processing.”

- Carlsson et al. (2015). “To identify the causal effect of schooling on cognitive skills, we exploit conditionally random variation in the date Swedish males take a battery of cognitive tests in preparation for military service. We find an extra ten days of school instruction raises scores on crystallized intelligence tests (synonyms and technical comprehension tests) by approximately 1% of a standard deviation, whereas extra nonschool days have almost no effect. In contrast, test scores on fluid intelligence tests (spatial and logic tests) do not increase with additional days of schooling but do increase modestly with age.”

- Ritchie, Bates, and Deary (2015). “We conducted structural equation modeling on data from a large (n=1,091), longitudinal sample, with a measure of intelligence at age 11 years and 10 tests covering a diverse range of cognitive abilities taken at age 70. Results indicated that the association of education with improved cognitive test scores is not mediated by g, but consists of direct effects on specific cognitive skills. These results suggest a decoupling of educational gains from increases in general intellectual capacity.”

- Dahmann (2017). “First, I exploit the variation over time and across states to identify the effect of an increase in class hours on same-aged students’ intelligence scores, using data on seventeen year-olds from the German Socio-Economic Panel. Second, I investigate the influence of earlier instruction at younger ages, using data from the German National Educational Panel Study on high school graduates’ competences. The results show that, on average, neither instructional time nor age-distinct timing of instruction significantly improves students’ crystallized cognitive skills in adolescence.”

- Karwowski and Milerski (2021). “Using data collected during the recent educational reform introduced in Poland, this study examined whether schooling’s intensity relates to changes in cognitive abilities. […] However, after separate analyses for verbal and nonverbal intelligence, together with additional robustness checks, we conclude that the effect of more intensive schooling on cognitive growth was not systematic and quite unstable.”

- Lasker and Kirkegaard (2022). “We used the structural equation models from Ritchie, Bates & Deary (2015) on a longitudinal sample of over 4,000 American men who took an intelligence test near the end of high school and then took another around 37 years of age. Our results were consistent with theirs in that we found that the effect of education on intelligence test scores was not an improvement to intelligence itself, but instead was relegated to improvements to specific skills. Our results support the notion that education is not a source of enhanced intelligence, but it can help specific skills.”

Therefore, if we expect preparation or educational quantity/quality to affect exam scores, they must do so exclusively through the development of crystallized abilities that are independent of g (i.e., test-specific abilities). Because variation in non-g abilities only accounts for a minority of the variance in standardized exam scores, the ability of preparation, whether through explicit exam prep programs, private tutoring, or greater quantity/quality of general schooling, to affect exam scores is inherently limited. This is especially true on the margin: the differences in crystallized knowledge (mathematical ability, reading comprehension, etc.) between two students will be dominated largely by differences in their rate of growth over their entire educational and developmental history, which is largely a function of cognitive ability (g). Even a year-long after-school prep class is not realistically going to make up for a 50% higher growth rate over the last five years.
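To make the last comparison concrete, assume, purely for illustration, that crystallized skill grows linearly in arbitrary units: one student at 1.0 unit per year and a peer at 1.5. The hypothetical helper below computes how many times its usual yearly growth the slower student would need during a prep period to draw level, given that the peer keeps growing in the meantime.

```python
def required_growth_multiplier(base_rate, fast_rate, years_before, prep_years):
    """How many times its usual yearly growth the slower student needs during the
    prep period to catch a faster-growing peer (hypothetical linear-growth model)."""
    gap = (fast_rate - base_rate) * years_before   # lead built up before prep starts
    needed = gap + fast_rate * prep_years          # the peer keeps growing meanwhile
    return needed / (base_rate * prep_years)


print(required_growth_multiplier(1.0, 1.5, years_before=5, prep_years=1))
```

With five prior years and a one-year class, the result is 4.0: under this toy model, the slower student would have to quadruple their usual annual growth during the prep year.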

To be clear: standardized exams are, in principle, gameable. A fair amount of the content tested consists of a finite set of concrete material that a student could theoretically “grind” or “memorize” without any underlying improvement in actual cognitive ability: there is a finite set of vocabulary words and a finite number of techniques needed to solve all the math questions on any given exam. However, in practice, where students cannot easily be locked in isolation rooms and forced to memorize SAT vocabulary words with a gun pointed at their heads, the effect of preparation, and indeed of education in general, is quite low.

To finish, we ought to note that even if test prep did have a substantial effect, any given student’s willingness to actually engage in extended prep, relative to other members of their cohort, is largely determined by their conscientiousness. This is a highly desirable personality trait which also governs, for example, how likely someone is to show up to work on time, and has been shown to be >75% heritable (see e.g. Takahashi et al. (2021)). That is to say, a great deal of the effectiveness of test preparation, if it were hypothetically effective, would still be mediated by innate, heritable, and largely immutable traits which educational institutions should want to actively select for.

Asian culture does not explain Asian-American exam outperformance

Even granting the relative ineffectiveness of exam preparation, some claim that “Asian culture” uniquely results in Asian-American outperformance well beyond what one would expect from their natural ability. It is well known that Asian culture emphasizes the value of education and hard work, and that Asian societies have long traditions of studying hard to succeed in meritocratic examinations. However, the quantitative data do not support the presence of an “Asian culture effect.”

To begin with, we ought to note that cultures other than East Asian ones also value hard work. America was built upon Calvinism and the Protestant work ethic, and Jewish culture has long emphasized the value of academic and theological study. It is certainly not clear a priori that one would expect the “Asian culture effect” to be substantially stronger than these other cultural impulses. Furthermore, it is important to be careful here to isolate causal effects: parents who have higher g are also more likely to be heavily involved in their children’s education, to live in neighborhoods with other intelligent parents who also value education, and so on, which would explain the cornucopia of assorted, weak effects previously found in relation to Asian-American families. (A summary is given by Kim, Cho, and Song (2019). Note that the studies reviewed are often low-quality and do not isolate causality well.)

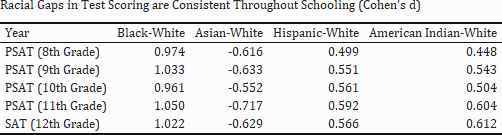

There are two convincing forms of evidence for the lack of an “Asian culture effect.” The first such type of evidence comes from Michigan, where students can take the (low-stakes) PSAT in grades 8 through 11 as well as a free (high-stakes) SAT in grade 12. Typically, SAT test-takers are selected for more intelligent or motivated students; this setting is therefore as close as we can reasonably get to a more uniform and representative sampling across different ethnicities.

In the presence of an “Asian culture effect,” we would expect to see the Asian-White gap grow over time, especially as students approach the higher-stakes SAT administration in the 12th grade. Presumably the bulk of students’ preparation occurs through the 8th to 12th grades; if Asian culture induced Asian students to study more, or more effectively, we would see this gap widen. However, there is no significant widening of the Asian-White gap in the Michigan PSAT/SAT data:

The second form of evidence comes from adoption studies showing that Asian adoptees reared in non-Asian families continue to outperform non-Asians. For instance, Tan (2018) finds that “adopted Chinese youth outperformed their classmates on academic competence,” and because these children are reared in non-Chinese families, this cannot be attributed to cultural effects specific to Asian families. This result is especially notable because adoptees are typically expected to perform worse, as the adoptee population is heavily adversely selected for genetic traits associated with poor academic and professional performance. A similar effect is found in Odenstad (2008), where Korean adoptees slightly outperform non-Korean international adoptees in Swedish families. (In this latter study, keep in mind that Korean adoptees are a much less selected population than Asian-American immigrants, who are quite heavily filtered for traits correlated with intelligence.)

Both of these arguments are consistent with the existence of a fixed, intrinsic difference between the mean cognitive abilities of the two groups. They do not conclusively demonstrate that Asian outperformance is due to group differences in innate ability (certainly, any commenter who is motivated enough can come up with increasingly convoluted, magical, “god-of-the-gaps” hypotheses for the nature of the “Asian culture effect”), but the evidence does not suggest that Asian outperformance is due to specific cultural factors.

A number of other findings are also consistent with the lack of an “Asian culture effect”:

- Byun and Park (2011) find that while East Asian-American students are more likely to use commercial test prep services, “the impact of private tutoring was trivial for all racial/ethnic groups, including East Asian American students.”

- An analysis of U.S. Census data from Crémieux shows that the average income of Asian households is approximately the same in both urban and rural areas, suggesting that the propensity of Asian households to disproportionately live in high-density urban areas, which generally have higher wages than rural areas, does not explain their outperformance.

- When the actual parenting styles of Asian-Americans are measured, as in Sue and Okazaki (1990), it appears that Asian-American students come from families “high on authoritarian and permissive and low on authoritative characteristics” with “the lowest level of parental involvement among the groups studied.” These parenting styles were generally associated with lower academic achievement: “The very characteristics associated with the Asian-American group predicted low academic achievement for all groups; yet, Asian-American students had higher levels of academic achievements.” While parenting style is complex and hard to fully quantify, a simple analysis certainly does not imply that “Asian-specific” parenting styles are responsible for boosting performance; if anything, Asian-American children are being held back!

In sum, intelligence is highly heritable; standardized exam scores are good measures of g; test prep does not meaningfully affect exam performance; finally, there is no evidence for the existence of an “Asian culture effect” boosting the academic achievement levels of Asian-American students. Therefore, we are left to conclude that Asian-American outperformance is reflective of a higher level of intrinsic ability.

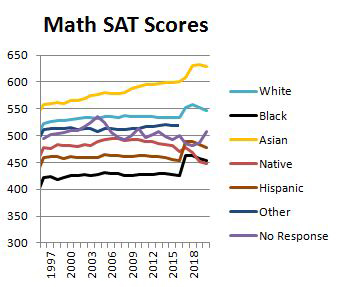

Some readers may find this surprising in light of the fact that Asian-American performance has been rising consistently throughout recent history. For example, from 1997 through 2018, SAT Math scores increased by around 80 points for Asian-Americans, a difference of >0.5 standard deviations; other racial groups showed more modest improvements in contrast.

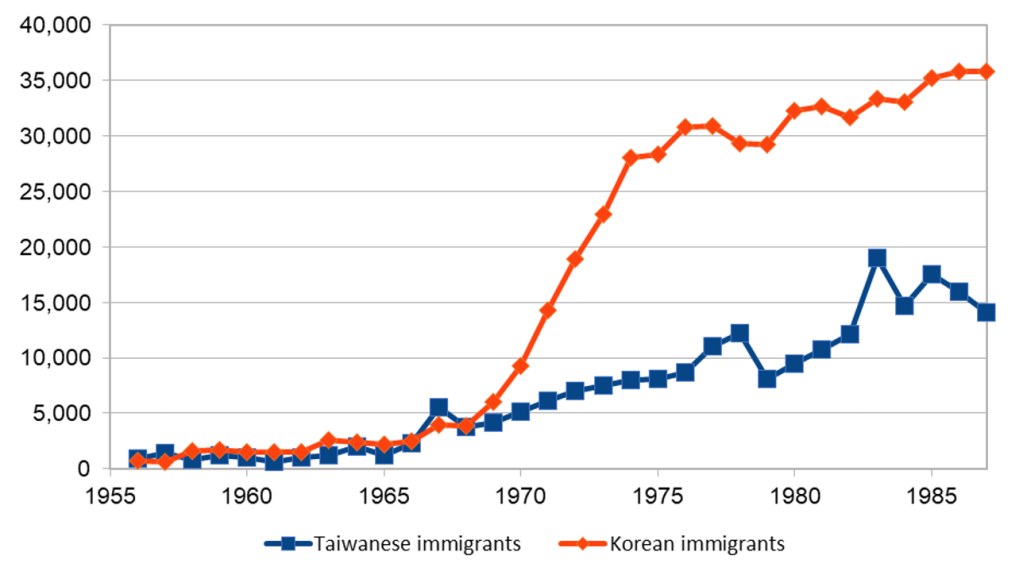

While it is hard to pinpoint the exact cause of this increase, it is actually evidence for the lack of an “Asian culture effect.” Naively, one might be tempted to attribute this rise to the increasing competitiveness of college admissions, resulting in the manifestation of higher levels of “Asian test prep grinding” and so on. But consider that in the United States, the “Asian” category originally included a population that was relatively unselected for intelligence, such as Chinese railroad workers, Pacific Islander immigrants, Vietnamese refugees, and so on. Only recently has the immigrant population become increasingly skewed toward intelligent, rich, and well-educated Asians, such as Taiwanese or, more recently, mainland Chinese Ph.D. students:

(Figure is from “Going to America”: An overview on Taiwanese Migration to the US, which cites Long and Huang (2002).)

The number of highly selected immigrants from East Asian countries has only increased in subsequent years, and substantial lags separate immigration, childbirth, and the administration of exams to immigrants’ children; recent patterns of immigration are therefore entirely consistent with, and plausibly sufficient to explain, the consistent, multi-decade rise in Asian-American test scores at the aggregate level.

Given the heterogeneity of Asian immigrant inflow into the United States and the long timescales over which immigration happened (and continues to happen), as well as the extreme scarcity of detailed, high-quality data on the cognitive abilities of immigrant populations and their children, it is ultimately very challenging to say whether this is the root cause of the persistent, secular growth in Asian-American performance. However, given preexisting knowledge that the filtering mechanisms restricting immigration are highly selective with respect to cognitive abilities, one could argue that this should even be taken as the null hypothesis for Asian-American outperformance. Regardless, the “inflow quality hypothesis” is a compelling one.

Conclusion

Throughout this article, we have carefully developed a step-by-step argument which demonstrates the following points:

- Intelligence is a valid construct and can be represented by a single factor, g

- Intelligence is highly heritable and correlated with exam scores

- Exam scores are not meaningfully affected by parental environment, and only marginally affected by test prep

- There is no “Asian culture effect” that clearly explains Asian-American outperformance, and such outperformance very plausibly reflects innate group ability, whose average continues to rise with highly selected Asian immigration into America

Therefore, not only would elimination of standardized test scores be contrary to any goal of selecting students with intellectual talent; it is tantamount to open discrimination against Asian-Americans.

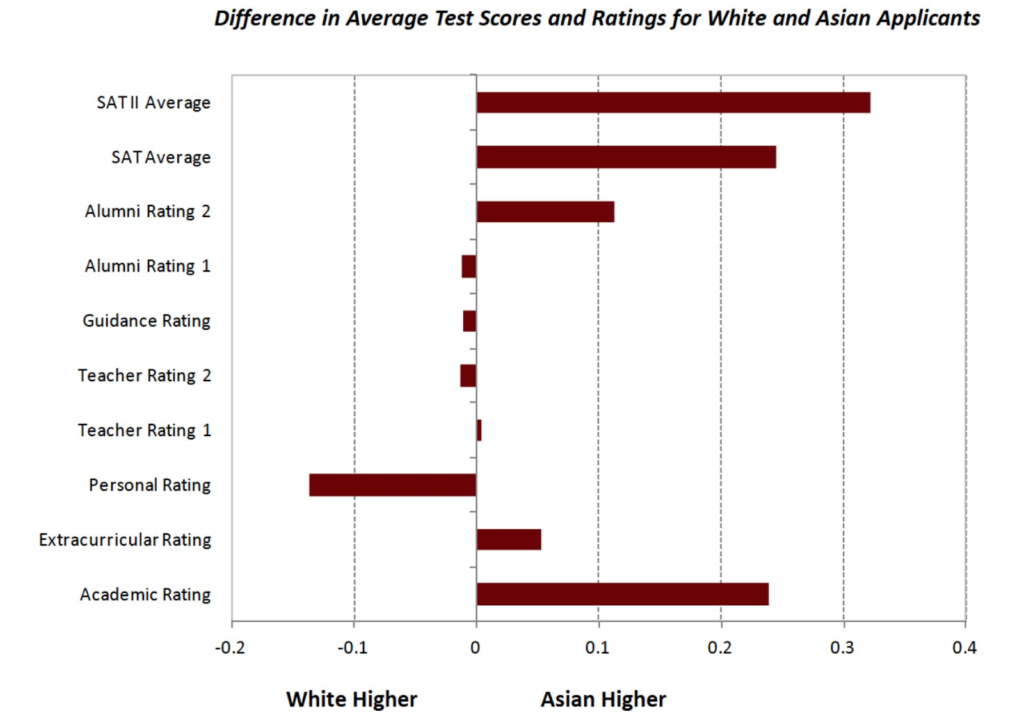

It is essentially “open season” on Asian-Americans in the American educational sphere. Explicit policies of discrimination against Asians have been an open secret for years:

The outright elimination of the SAT, ACT, and other exams is only the latest volley in an assault on Asian-American equality that began in the late 1980s, as documented in detail by Ron Unz and currently being litigated in Students for Fair Admissions v. University of North Carolina and Students for Fair Admissions v. President and Fellows of Harvard College.

The pro-standardized exam argument has been made piecemeal in a number of different places (blog posts, articles, court filings, and so on). However, we hope that this post can serve as a comprehensive argument that begins from the first step (validity of g as a construct) and, slowly, with detailed references to the established scientific literature, brings us inexorably through a series of conclusions terminating at the validity of standardized exams and, consequently, the nature of Asian-American outperformance. We have not yet seen a public document of this type; we hope this one will serve as a useful contribution to the ongoing discourse on the usefulness of standardized testing in educational admissions.

References

- Bartholomew, D. J. Measuring Intelligence: Facts and Fallacies. (Cambridge University Press, 2004).

- Beaver, K. M. et al. The genetic and environmental architecture to the stability of IQ: Results from two independent samples of kinship pairs. Intelligence 41, 428–438 (2013).

- Bouchard, T. J. The Wilson Effect: The Increase in Heritability of IQ With Age. Twin Res Hum Genet 16, 923–930 (2013).

- Briggs, D. C. The Effect of Admissions Test Preparation: Evidence from NELS:88. CHANCE 14, 10–18 (2001).

- Buchmann, C., Condron, D. J. & Roscigno, V. J. Shadow Education, American Style: Test Preparation, the SAT and College Enrollment. Social Forces 89, 435–461 (2010).

- Byun, S. & Park, H. The Academic Success of East Asian American Youth: The Role of Shadow Education. Sociol Educ 85, 40–60 (2012).

- Carlsson, M., Dahl, G. B., Öckert, B. & Rooth, D.-O. The Effect of Schooling on Cognitive Skills. Review of Economics and Statistics 97, 533–547 (2015).

- Dahmann, S. C. How does education improve cognitive skills? Instructional time versus timing of instruction. Labour Economics 47, 35–47 (2017).

- Dalliard. Is Psychometric g a Myth? Human Varieties https://humanvarieties.org/2013/04/03/is-psychometric-g-a-myth/ (2013).

- Dalliard. Some Further Notes on g and Shalizi. Human Varieties https://humanvarieties.org/2013/04/14/some-further-notes-on-g-and-shalizi/ (2013).

- de Rooij, S. R., Wouters, H., Yonker, J. E., Painter, R. C. & Roseboom, T. J. Prenatal undernutrition and cognitive function in late adulthood. Proceedings of the National Academy of Sciences 107, 16881–16886 (2010).

- Editor. “Going to America”: An overview on Taiwanese Migration to the US. Taiwan Insight https://taiwaninsight.org/2021/06/15/going-to-america-an-overview-on-taiwanese-migration-to-the-us/ (2021).

- Elwood, K. Appeals court upholds Thomas Jefferson High School’s admission policy. Washington Post (2023).

- Finn, A. S. et al. Cognitive Skills, Student Achievement Tests, and Schools. Psychol Sci 25, 736–744 (2014).

- Frey, M. C. & Detterman, D. K. Scholastic Assessment or g?: The Relationship Between the Scholastic Assessment Test and General Cognitive Ability. Psychol Sci 15, 373–378 (2004).

- Gottfredson, L. S. Why g matters: The complexity of everyday life. Intelligence 24, 79–132 (1997).

- Gould, S. J. The Mismeasure of Man. (W. W. Norton & Company, 1981).

- Graetz, G. & Karimi, A. Explaining gender gap variation across assessment form.

- Higdem, J. L. et al. The Role of Socioeconomic Status in SAT-Freshman Grade Relationships Across Gender and Racial Subgroups. Educational Measurement: Issues and Practice 35, 21–28 (2016).

- Howe, L. J. et al. Within-sibship genome-wide association analyses decrease bias in estimates of direct genetic effects. Nat Genet 54, 581–592 (2022).

- Hsu, S. D. H. On the genetic architecture of intelligence and other quantitative traits. Preprint at http://arxiv.org/abs/1408.3421 (2014).

- Hur, Y.-M. & Bates, T. Genetic and Environmental Influences on Cognitive Abilities in Extreme Poverty. Twin Res Hum Genet 22, 297–301 (2019).

- Johnson, W., Nijenhuis, J. te & Bouchard, T. J. Still just 1 g: Consistent results from five test batteries. Intelligence 36, 81–95 (2008).

- jsmp. Taleb is wrong about IQ. archive.is https://archive.is/kzy5i (2023).

- Karwowski, M. & Milerski, B. Intensive schooling and cognitive ability: A case of Polish educational reform. Personality and Individual Differences 183, 111121 (2021).

- Kaufman, S. B., Reynolds, M. R., Liu, X., Kaufman, A. S. & McGrew, K. S. Are cognitive g and academic achievement g one and the same g? An exploration on the Woodcock–Johnson and Kaufman tests. Intelligence 40, 123–138 (2012).

- Kim, S. W., Cho, H. & Song, M. Revisiting the explanations for Asian American scholastic success: a meta-analytic and critical review. Educational Review 71, 691–711 (2019).

- Lasker, J. & Kirkegaard, E. O. W. The Generality of Educational Effects on Intelligence: A Replication.

- Lee, J. J. Review of intelligence and how to get it: Why schools and cultures count, R.E. Nisbett, Norton, New York, NY (2009). ISBN: 9780393065053. Personality and Individual Differences 48, 247–255 (2010).

- Lewis, J. E. et al. The Mismeasure of Science: Stephen Jay Gould versus Samuel George Morton on Skulls and Bias. PLoS Biol 9, e1001071 (2011).

- Loehlin, J. C., Corley, R. P., Reynolds, C. A. & Wadsworth, S. J. Heritability x SES interaction for IQ: Is it present in US adoption studies? (2023).

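- Long, W. & Huang, G. 台灣及兩岸三地華人人口推估方法-理論構建與實證探討(以美國為例) [Methods for estimating the ethnic Chinese population from Taiwan and the cross-strait regions: theoretical construction and empirical investigation (the case of the United States)]. (Zhonghua Minguo qiao wu wei yuan hui [Overseas Compatriot Affairs Commission, Republic of China], 2002).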

- McGue, M. et al. The Environments of Adopted and Non-adopted Youth: Evidence on Range Restriction From the Sibling Interaction and Behavior Study (SIBS). Behav Genet 37, 449–462 (2007).

- Moore, R. Investigating Test Prep Impact on Score Gains Using Quasi-Experimental Propensity Score Matching.

- Nisbett, R. E. Intelligence and How to Get It: Why Schools and Cultures Count. (W. W. Norton & Company, 2010).

- Odenstad, A. et al. Does age at adoption and geographic origin matter? A national cohort study of cognitive test performance in adult inter-country adoptees. Psychol. Med. 38, 1803–1814 (2008).

- Panizzon, M. S. et al. Genetic and environmental influences on general cognitive ability: Is g a valid latent construct? Intelligence 43, 65–76 (2014).

- Pesta, B. J., Kirkegaard, E. O. W., Te Nijenhuis, J., Lasker, J. & Fuerst, J. G. R. Racial and ethnic group differences in the heritability of intelligence: A systematic review and meta-analysis. Intelligence 78, 101408 (2020).

- Pesta, B. J., Te Nijenhuis, J., Lasker, J., Kirkegaard, E. O. W. & Fuerst, J. G. R. On group differences in the heritability of intelligence: A reply to Giangrande and Turkheimer (2022). Intelligence 98, 101737 (2023).

- Powers, D. E. & Rock, D. A. Effects of Coaching on SAT® I: Reasoning Scores. ETS Research Report Series 1998, i–17 (1998).

- Rask-Andersen, M., Karlsson, T., Ek, W. E. & Johansson, Å. Modification of Heritability for Educational Attainment and Fluid Intelligence by Socioeconomic Deprivation in the UK Biobank. AJP 178, 625–634 (2021).

- Ritchie, S. Intelligence: All That Matters. (Teach Yourself, 2016).

- Ritchie, S. J., Bates, T. C. & Deary, I. J. Is education associated with improvements in general cognitive ability, or in specific skills? Developmental Psychology 51, 573–582 (2015).

- Ritchie, S. J., Bates, T. C., Der, G., Starr, J. M. & Deary, I. J. Education is associated with higher later life IQ scores, but not with faster cognitive processing speed. Psychology and Aging 28, 515–521 (2013).

- Sackett, P. R., Kuncel, N. R., Arneson, J. J., Cooper, S. R. & Waters, S. D. Does socioeconomic status explain the relationship between admissions tests and post-secondary academic performance? Psychological Bulletin 135, 1–22 (2009).

- Sackett, P. R. et al. The Role of Socioeconomic Status in SAT-Grade Relationships and in College Admissions Decisions. Psychol Sci 23, 1000–1007 (2012).

- Sanchez, E. The Impact of ACT Kaplan Online Prep Live on ACT Score Gains. (2018).

- Schwabe, I., Janss, L. & van den Berg, S. M. Can We Validate the Results of Twin Studies? A Census-Based Study on the Heritability of Educational Achievement. Front. Genet. 8, 160 (2017).

- SEAN. Nassim Taleb on IQ. Ideas and Data https://ideasanddata.wordpress.com/2019/01/08/nassim-taleb-on-iq/ (2019).

- Shalizi, C. g, a Statistical Myth. Three-Toed Sloth http://bactra.org/weblog/523.html (2007).

- Skodak, M. & Skeels, H. M. A Final Follow-Up Study of One Hundred Adopted Children. The Pedagogical Seminary and Journal of Genetic Psychology 75, 85–125 (1949).

- Sloan, K. ABA will try yet again to eliminate LSAT rule. Reuters (2023).

- Spearman, C. The Abilities of Man. (Macmillan, 1927).

- Sue, S. & Okazaki, S. Asian-American Educational Achievements: A Phenomenon in Search of an Explanation. American Psychologist 45, 913–920 (1990).

- Taleb, N. N. IQ is largely a pseudoscientific swindle. INCERTO https://medium.com/incerto/iq-is-largely-a-pseudoscientific-swindle-f131c101ba39 (2019).

- Takahashi, Y., Zheng, A., Yamagata, S. & Ando, J. Genetic and environmental architecture of conscientiousness in adolescence. Sci Rep 11, 3205 (2021).

- Tan, T. X. Model minority of a different kind? Academic competence and behavioral health of Chinese children adopted into White American families. Asian American Journal of Psychology 9, 169–178 (2018).

- Turkheimer, E., Haley, A., Waldron, M., D’Onofrio, B. & Gottesman, I. I. Socioeconomic Status Modifies Heritability of IQ in Young Children. Psychol Sci 14, 623–628 (2003).

- van Beijsterveldt, C. E. M. et al. Chorionicity and Heritability Estimates from Twin Studies: The Prenatal Environment of Twins and Their Resemblance Across a Large Number of Traits. Behav Genet 46, 304–314 (2016).

- Vinkhuyzen, A. A. E., van der Sluis, S., Maes, H. H. M. & Posthuma, D. Reconsidering the Heritability of Intelligence in Adulthood: Taking Assortative Mating and Cultural Transmission into Account. Behav Genet 42, 187–198 (2012).

- Warne, R. T., Burton, J. Z., Gibbons, A. & Melendez, D. A. Stephen Jay Gould’s Analysis of the Army Beta Test in The Mismeasure of Man: Distortions and Misconceptions Regarding a Pioneering Mental Test. J. Intell. 7, 6 (2019).

- Watanabe, T. UC slams the door on standardized admissions tests, nixing any SAT alternative. Los Angeles Times https://www.latimes.com/california/story/2021-11-18/uc-slams-door-on-sat-and-all-standardized-admissions-tests (2021).

- Willoughby, E. A., McGue, M., Iacono, W. G. & Lee, J. J. Genetic and environmental contributions to IQ in adoptive and biological families with 30-year-old offspring. Intelligence 88, 101579 (2021).

- Wolfram, T. (Not just) Intelligence stratifies the occupational hierarchy: Ranking 360 professions by IQ and non-cognitive traits. Intelligence 98, 101755 (2023).

- Yang, J., Lee, S. H., Goddard, M. E. & Visscher, P. M. GCTA: A Tool for Genome-wide Complex Trait Analysis. The American Journal of Human Genetics 88, 76–82 (2011).

- Yengo, L. et al. A saturated map of common genetic variants associated with human height. Nature 610, 704–712 (2022).

- Young, A. I. et al. Mendelian imputation of parental genotypes improves estimates of direct genetic effects. Nat Genet 54, 897–905 (2022).

- Zisman, C. & Ganzach, Y. The claim that personality is more important than intelligence in predicting important life outcomes has been greatly exaggerated. Intelligence 92, 101631 (2022).

May 25th, 2023 at 4:06 am

Excellent article!

May 25th, 2023 at 4:36 am

good article bro

May 25th, 2023 at 6:07 pm

Assuming that heritability is high, the idea of allowing leniency for lower scoring students is more understandable. Social stratification would be inevitable if every student was accepted solely for academic performance. That’s why it’s hard to buy the “discrimination” vs Asians rhetoric. In some sense it is, but one could also counter that you are discriminating against other groups due to things they can’t control (genes) etc.

Another critique I have is with the idea that environment doesn’t matter. That’s obviously ridiculous. Simply look at any case of a feral child and you will realize it matters. Instead, you should simply mention that, hey, once you reach a threshold of suitable environmental conditions, additional enhancements to your environment don’t seem to increase cognitive ability by much.

And in regards to the argument vs GWAS. It’s a fallacy to say that oh even though within family heritability is at 20% it will eventually reach over 50%. It’s also plausible that it won’t and you can’t simply say it will just get increase to the extent of the twin studies.

I have a mixed opinion on IQ. As a predictor it’s really not as strong as people say. I also believe that the basic conception that people colloquially have of intelligence is only somewhat correlated with the g factor. But in general I think there are differences in IQ, no doubt about that. Some are predisposed to being smart and others not so smart. For me, I am just not sure how strong the genetic effect is. Is it 50%, 70%, or maybe less? And how does one deal with all the confounding? It’s always hard to digest info from the “race realist” communities because they are usually quite biased and hostile towards criticism. Same with the anti-race-realist crowd. You guys just constantly block each other on Twitter and hold yourselves in your own respective echo chambers. It would be cool, for instance, if someone with an opposing position wrote a blog in response to this one. Like a proper dialogue, you know?

Anyway, good article man!

May 25th, 2023 at 11:05 pm

@Dave:

> And in regards to the argument vs GWAS. It’s a fallacy to say that oh even though within family heritability is at 20% it will eventually reach over 50%. It’s also plausible that it won’t and you can’t simply say it will just get increase to the extent of the twin studies.

It doesn’t seem like a fallacy? This is how it worked for, say, height.

May 26th, 2023 at 6:27 pm

@milkyeggs:

> It doesn’t seem like a fallacy? This is how it worked for, say, height

Yes, it may have been that way with height, but that does not necessitate that the same will follow for IQ. If 10 years later, with larger sample sizes and higher-quality data, the GWAS heritability for IQ still doesn’t seem to be reaching very high, then one could simply say “we just need even more data” or “it will go up just like it did with height”. Thus there is circularity. All we have is the current data, which suggests 20% heritability, and they have been conducting these studies for many years. More concrete evidence is needed. We can’t assume the missing heritability will simply reappear. Even if some of it does, it might never match the rates seen in classical twin studies, which could suggest we missed something.

May 27th, 2023 at 3:14 pm

While there certainly is anti-Asian discrimination, it isn’t obvious to me that Asians are relatively more discriminated against than whites. This report finds that SAT-only admissions would increase the percentage of whites and decrease the share of Asians at top 200 colleges.

https://cew.georgetown.edu/cew-reports/satonly/

May 30th, 2023 at 2:55 pm

Typo:

“Both IQ scores and SAT scores are correlated with g at well over 0.8, so if parental environment does not meaningfully affect SAT scores, we should also expect that it does not meaningfully affect SAT scores. But let’s take a closer look.”

May 30th, 2023 at 3:45 pm

“It is worth noting that the existence of g is not obvious a priori. For athletics, for instance, there is no intuitively apparent “a factor” which explains the majority of the variation in all domains of athleticism. While many sports do end up benefiting from the same traits, in certain cases, different types of athletic ability may be anticorrelated: for instance, the specific body composition and training required to be an elite runner will typically disadvantage someone in shotput or bodybuilding. However, when it comes to cognitive ability, no analogous tradeoffs are known.”

I’m not sure I agree with this. You don’t think what we intuitively call “coordination”–the ability to use different parts of the body together smoothly and efficiently–constitutes a general factor of athleticism? Even something like long distance running benefits from coordination to maintain good running form: a relaxed, rhythmic swaying of the arms, an upright posture, and an even stride cadence.

Looking at body composition (e.g. height or muscularity) to determine athleticism is a red herring.

I think the general factor of athleticism is obscured somewhat by selection effects. In adulthood, people settle into the level and type of athletic leisure that’s most comfortable to them. But in unselected settings, there seem to me to be large differences in general athletic ability. Anecdotally: in elementary and middle school P.E. class (the last time I was in an athletic setting with a representative sample of the general population), there were *large* correlations between who was good at the different activities. The same kids who were good at foursquare were also good at short sprints and also good at kickball, etc.

Okay, so I tried to briefly look up some scientific literature to back up my claim of the existence of a general factor of athleticism, and the general factor seems… to not exist?

Firstly, I am struck by how little research there is into the statistical factors that underpin athleticism. Lots of qualitative sports science about “motor skill transfer” and “physical literacy” (the last one is an actual term–really), but why don’t you just throw a battery of tests into R?

For example, I looked up “presidential fitness test factor analysis” expecting an avalanche of results. It’s certainly the first thing I would study if I were a sport scientist. But I barely got anything.

What few search results I did see support the notion that athleticism isn’t quite unitary. Traits like explosiveness, endurance, and fine motor control seem to vary independently. So that provides evidence for your claim that the unitarity of g is actually a surprising statement about reality and not a statistical tautology.
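The “throw a battery of tests into R” idea can be illustrated with a minimal Python sketch. It uses the share of variance carried by the first principal component of a correlation matrix as a crude stand-in for a general factor; this is a simplification, not a proper factor analysis (which would model each test’s unique variance separately). The helper names and all three correlation matrices are hypothetical, chosen only to show the contrast: when every test correlates with every other (a positive manifold), the first component dominates, while independent or clustered traits spread the variance out. For k tests that all correlate at r, the first eigenvalue is 1 + (k - 1)r.

```python
import math


def dominant_eigen(matrix, iterations=1000, tol=1e-12):
    """Power iteration for a symmetric positive semidefinite matrix
    (list of lists). Returns (largest eigenvalue, unit eigenvector).
    Starting from the all-ones direction is safe for matrices with
    nonnegative entries, like the hypothetical ones below."""
    n = len(matrix)
    vec = [1.0 / math.sqrt(n)] * n
    value = 0.0
    for _ in range(iterations):
        # Multiply the matrix by the current vector, then renormalize.
        product = [sum(matrix[i][j] * vec[j] for j in range(n)) for i in range(n)]
        norm = math.sqrt(sum(p * p for p in product))
        vec = [p / norm for p in product]
        # Rayleigh quotient v^T A v estimates the eigenvalue for a unit vector v.
        new_value = sum(
            vec[i] * sum(matrix[i][j] * vec[j] for j in range(n)) for i in range(n)
        )
        if abs(new_value - value) < tol:
            return new_value, vec
        value = new_value
    return value, vec


def first_component_share(corr):
    """Fraction of total standardized variance (the trace, i.e. the number
    of tests) carried by the first principal component."""
    largest, _ = dominant_eigen(corr)
    return largest / len(corr)


def equal_correlations(k, r):
    """Hypothetical k-test battery where every pair of tests correlates at r."""
    return [[1.0 if i == j else r for j in range(k)] for i in range(k)]


# Hypothetical four-test batteries (illustrative numbers, not real data).
positive_manifold = equal_correlations(4, 0.5)  # every test correlates with every other
independent = equal_correlations(4, 0.0)        # no shared variance at all
two_clusters = [                                # e.g. "explosiveness" vs "endurance"
    [1.0, 0.6, 0.0, 0.0],
    [0.6, 1.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 0.6],
    [0.0, 0.0, 0.6, 1.0],
]

for name, corr in [("positive manifold", positive_manifold),
                   ("independent", independent),
                   ("two clusters", two_clusters)]:
    print(f"{name}: {first_component_share(corr):.3f}")
```

Run as-is, it prints 0.625 for the positive manifold, 0.250 for the independent battery and 0.400 for the two clusters. Power iteration is used only to keep the sketch dependency-free; with real data, a library eigensolver or a dedicated factor-analysis routine would be the better choice.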

May 31st, 2023 at 5:59 am

If intelligence is ~80% genetic and not affected by culture, parents’ background or test preparation, then what accounts for the ~20% of variability that is not hereditary? 20% is not insignificant, and could still explain a large share of intergroup differences.

(I know I’m conflating g and exam scores here, but that seems fair given the close relationship found in this piece.)
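The arithmetic behind this question can be made concrete under a deliberately simple model, assumed here for illustration rather than taken from the article: additive genetic and non-genetic components, no gene-environment correlation or interaction, measurement error lumped into the non-genetic part, and the same spread within each group. Here $d$ is a hypothetical group gap in phenotypic standard deviations, and $\Delta_E$ is how far apart the groups’ average non-genetic values would have to be, in units of that component’s own SD, for the whole gap to be non-genetic.

```latex
\begin{aligned}
V_P &= V_G + V_E, \qquad h^2 = \frac{V_G}{V_P} = 0.8 \\
\sigma_E &= \sqrt{1 - h^2}\,\sigma_P = \sqrt{0.2}\,\sigma_P \approx 0.447\,\sigma_P \\
\Delta_E &= \frac{d\,\sigma_P}{\sigma_E} = \frac{d}{\sqrt{1 - h^2}} \approx 2.24\,d
\end{aligned}
```

So under these assumptions a 1 SD gap would require roughly a 2.24 SD difference in the non-genetic component. This only sizes the shift required; it does not by itself establish what causes any particular gap, since heritability estimated within groups does not directly determine the sources of differences between them.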

May 31st, 2023 at 9:19 pm

The elimination of standardized tests from more accessible, but prestigious, state institutions is a plot by ‘people’ (I use the term very loosely) who went to Ivies to knock down their more impartially selected competitors. In the same way, those same cretins destroyed grammar schools in the UK.

June 7th, 2023 at 11:12 am

Have you seen this paper?